Arthur Brasseur

Senior Associate

Isabel Young

Senior Associate

Augustin Collot

Analyst

Our first blog in this series discussed how robotics has long been confined to narrow applications, but a new convergence of AI, hardware, and software is finally breaking those limits. Now we’ll look at why nations are competing for robotics dominance, and what’s inside the machines shaping the future.

A market on the move

Over the past decade, robotics adoption has followed a generally steady, linear trajectory. In industrial settings, the number of robots per 10k manufacturing employees tripled between 2013 and 2022, reflecting the gradual integration of automation into factory workflows. In the consumer market, adoption has also risen sharply, with cumulative home robot shipments surpassing 30m units by 2023, driven largely by devices like robotic vacuum cleaners. These trends point to growing acceptance and utility of robots in both professional and personal contexts. However, with recent breakthroughs, we may be approaching an inflection point, where adoption accelerates beyond the historical pace.

While adoption has broadened, the geopolitical landscape of robotics also matters, with the US and China increasingly leading the race.

- In Europe, robotics progress is uneven. Despite world-class research institutions and a strong industrial heritage, the continent is hampered by regulation (the AI Act and the EU Machinery Regulation), insufficient access to growth capital, and slower commercialization cycles. One conclusion of the Draghi report is that, to remain competitive, Europe needs significant funding for new technologies such as AI, quantum computing, and robotics, alongside deregulation.

- In sharp contrast, China is quickly establishing itself as a global powerhouse in robotics, driven by a combination of government policy, industrial strength, and rapid progress in AI. The country is also responding to rising wage inflation, which has put pressure on its traditional low-cost manufacturing advantage. Robotic automation is seen as a strategic solution, and steps are being taken to accelerate adoption. Central and local governments offer subsidies and R&D incentives to local manufacturers to help them automate – circa 17.5% of the equipment cost for robot purchases. Across the country, dozens of robotics startups are developing advanced systems with capabilities such as computer vision and autonomous navigation. These robots are being deployed in diverse sectors like manufacturing and logistics. Major manufacturers such as Foxconn have integrated robots into their operations, and several robotics firms have gone public, including Horizon Robotics. This growth also reflects the strategic aspect of maintaining industrial competitiveness in a higher-wage economy.

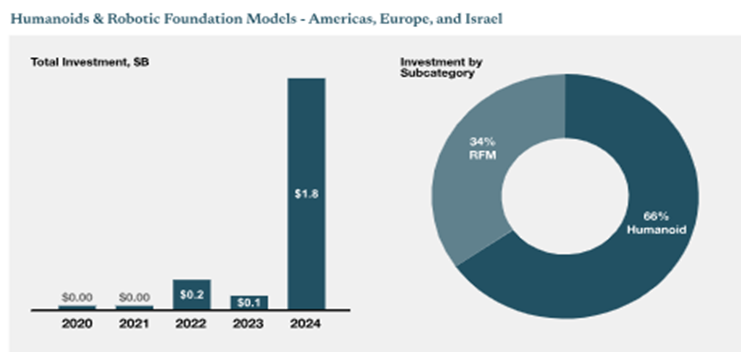

- Meanwhile, the US continues to lead in next-generation robotics, driven by a convergence of top-tier academic institutions, mature capital markets, and strong entrepreneurial ecosystems. The US robotics and AI market reached an estimated $5bn in 2024, and is expected to grow at a 22% CAGR, reaching nearly $39bn by 2034. American firms are particularly dominant in humanoids and robotic foundational models, which have attracted massive funding rounds. Companies like Figure, 1X, and Physical Intelligence have each raised hundreds of millions of dollars, positioning the US at the forefront of advanced robotics R&D. Big Tech players are also stepping in: Tesla (Optimus) has doubled down on humanoids; Amazon continues to automate logistics; and companies like Google and Meta are actively investing in robotics as part of broader AI strategies. Maintaining the current competitive edge will require continued investment, policy alignment, and clear prioritization at the federal level.
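The growth projection in the last bullet can be sanity-checked with the standard compound-growth formula. The sketch below uses only the rounded figures quoted above ($5bn, 22%, a ten-year horizon); the function names are ours, for illustration. With those rounded inputs the result lands around $36.5bn rather than $39bn, and the gap presumably comes from rounding in the published base or rate, which is an assumption on our part.

```python
def project_value(base: float, cagr: float, years: int) -> float:
    """Compound a starting value at a constant annual growth rate."""
    return base * (1 + cagr) ** years


def implied_cagr(base: float, final: float, years: int) -> float:
    """Annual growth rate that takes `base` to `final` over `years`."""
    return (final / base) ** (1 / years) - 1


# Rounded figures quoted in the text: $5bn in 2024, 22% CAGR, 2034 horizon.
projected_2034 = project_value(5.0, 0.22, 10)
rate_for_39bn = implied_cagr(5.0, 39.0, 10)

print(f"Projected 2034 market from rounded inputs: ${projected_2034:.1f}bn")
print(f"CAGR implied by a $39bn endpoint: {rate_for_39bn:.1%}")
```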

This surge in innovation is matched by record-breaking fundraising activity. The capital-intensive nature of robotics – driven by high R&D costs, talent demands, and complex hardware – has led to large rounds across the board. Notable recent investments include Figure ($675m), Physical Intelligence ($400m), Skild AI ($300m), Agility Robotics ($150m), and 1X ($100m). While many of these companies are still in the early innings, they’ve secured strong investor backing due to the sector’s transformative potential.

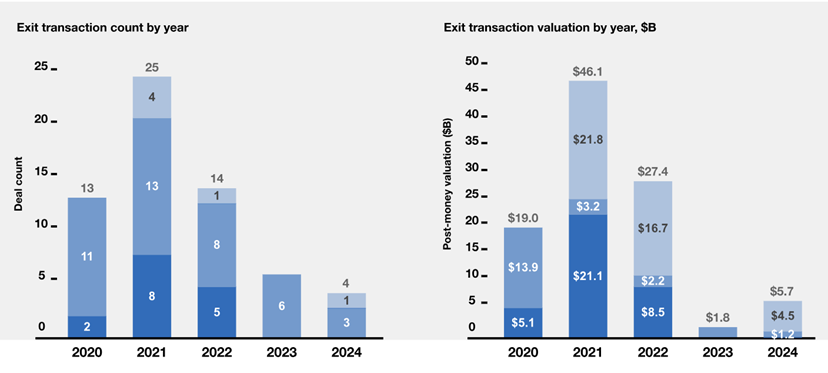

On the M&A side, while activity has been more muted over the past three years, several high-profile deals signal continued interest from strategic acquirers. Transactions exceeding $250m include the acquisition of Clearpath by Rockwell ($609m), Velodyne by Ouster ($600m), Ghost Robotics by LIG Nex1 ($400m), Dedrone by AXON ($500m), and Aerodome by Flock Safety ($300m). These deals reflect consolidation in specific verticals – particularly autonomy, sensing, and security – where synergies with incumbent players are strongest. Notably, every one of these transactions involves a US-based acquirer or target.

Source: Robotic Transactions >$25M

In the public markets, listed robotics companies like Procept BioRobotics, Aurora, Symbotic, and Intuitive Surgical command multi-billion-dollar valuations, reflecting investor confidence in the sector’s long-term relevance. These signals point to a market entering a new phase – driven by rapid technological progress, geopolitical competition, and increased capital availability.

While adoption has historically been gradual, the growing maturity of the ecosystem – from cutting-edge research to national strategies – suggests that robotics is becoming a core pillar of global technological transformation. To understand how this momentum translates into real-world impact, we now turn to the robotics value chain, from the “brain” and “body” of robots to the integrators enabling deployment across industries.

Inside a robot: brains, body, and coordination

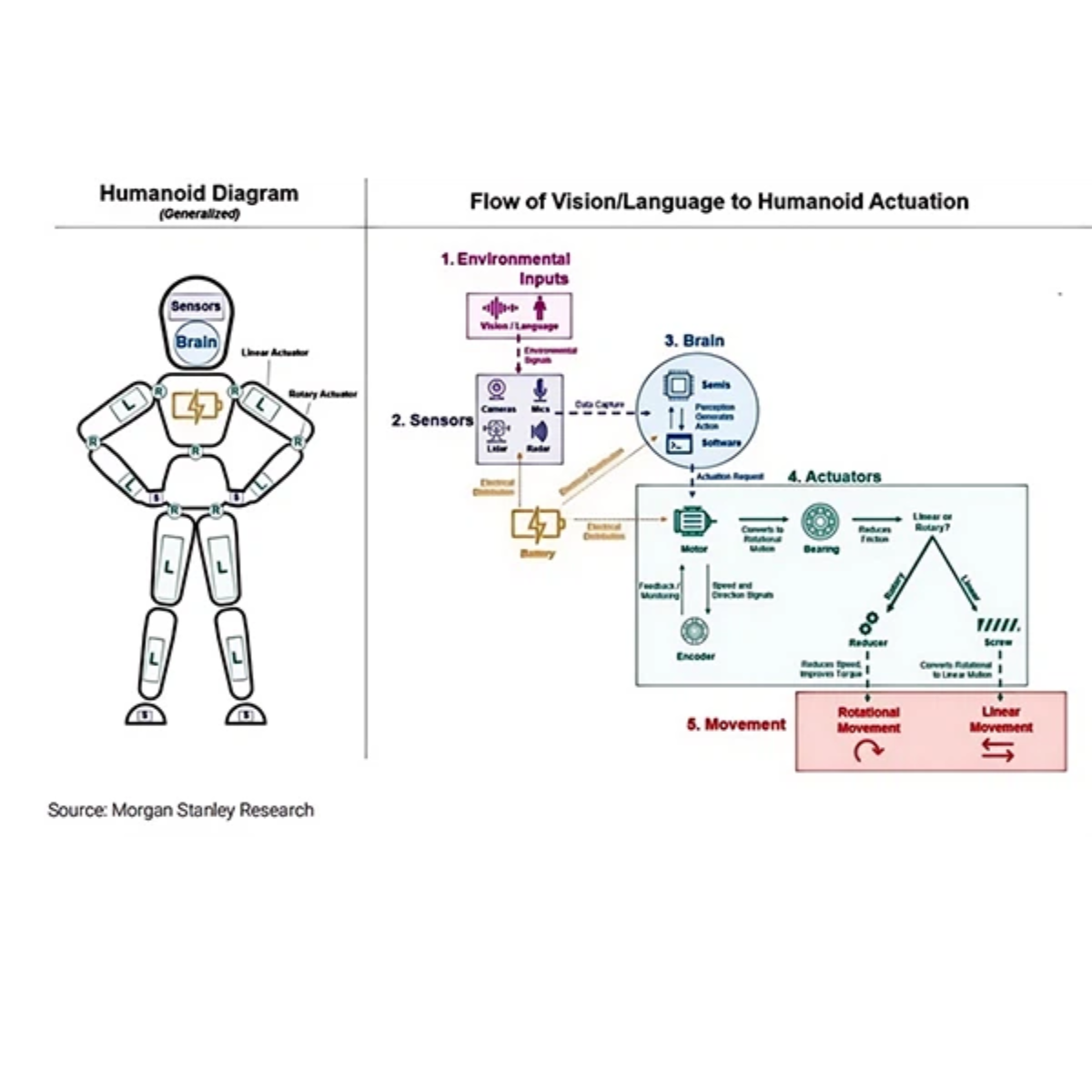

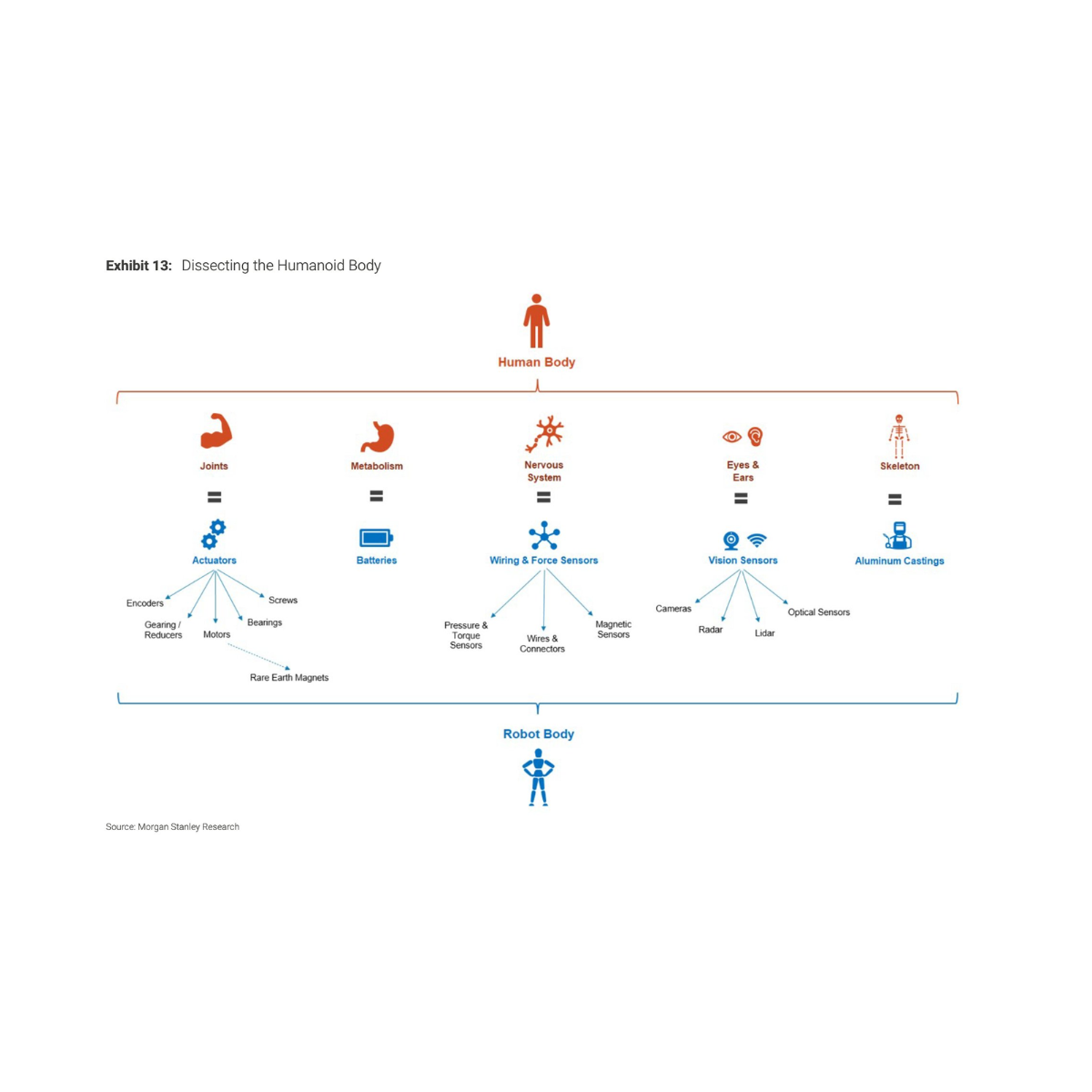

When we think about robots, much of the attention goes to the “brain” – the AI models and algorithms that power intelligence. But the robot body is equally important. Like our bodies, robots rely on integrated systems to move, sense, and operate in the physical world. Actuators function as joints and muscles, batteries serve as the metabolism, and sensors replicate the nervous system and senses. Vision modules act as eyes, while aluminum castings form the skeleton. This physical layer is what lets the brain act on the world.

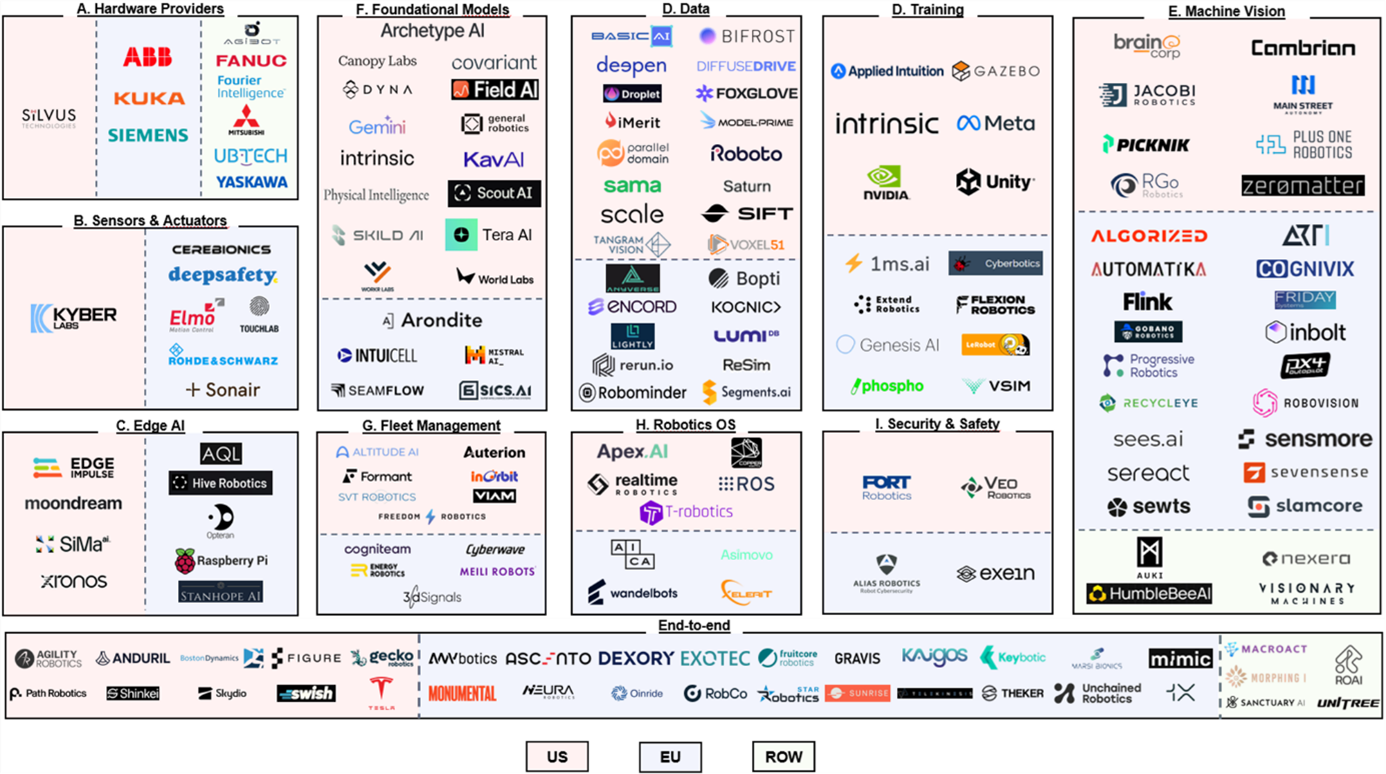

A. Hardware Providers

Hardware providers like FANUC, KUKA, and ABB have played a central role in the development of industrial automation, supplying robotic arms and motion systems widely adopted in manufacturing environments. Their hardware remains a key enabler for many robotics applications today, particularly in sectors like automotive. However, these systems were historically designed for repeatable, pre-programmed tasks and are only gradually evolving to accommodate the flexibility required by AI. While their manufacturing capacity gives them a head start, their ability to adapt to software-defined robotics will determine how relevant they remain in the next phase.

B. Actuators & Sensors

Actuators and sensors are the core building blocks that enable robots to move and perceive the world around them. Actuators function like muscles, converting electrical energy into motion to support tasks such as walking or grasping. Startups like Kyber Labs are developing artificial muscle fiber actuators that replicate the elasticity and responsiveness of human muscle, aiming to deliver more natural and precise movement at lower cost. On the sensing side, robots rely on a mix of technologies – cameras, LiDARs, radars, IMUs, and odometers – to understand their surroundings. Sonair, for example, uses ultrasound to provide omnidirectional 3D depth sensing, while Cerebionics is developing implantable brain–computer interfaces that could one day bridge neural activity with robotic control. These next-generation actuators and sensors are helping close the gap between robotic and human capabilities in real-world environments.

C. Edge AI: Real-time autonomy at the edge

As robots transition into real-world settings, local, real-time processing becomes critical. Edge AI enables robots to make decisions without relying on the cloud – ensuring low latency, greater reliability, and better data privacy. This is fueling the rise of “Tiny AI”: modular, affordable, task-specific, and privacy-preserving devices. Startups like Edge Impulse provide a platform for building and deploying ML models on edge devices; Sima.ai offers high-performance edge AI chips; Stanhope AI focuses on private inference that runs entirely offline; Opteran mimics insect brains to deliver lightweight, energy-efficient autonomy; and Hive Robotics uses edge AI to command, control, and swarm robots. Together, they are contributing to the growing adoption of edge AI solutions in robotics.

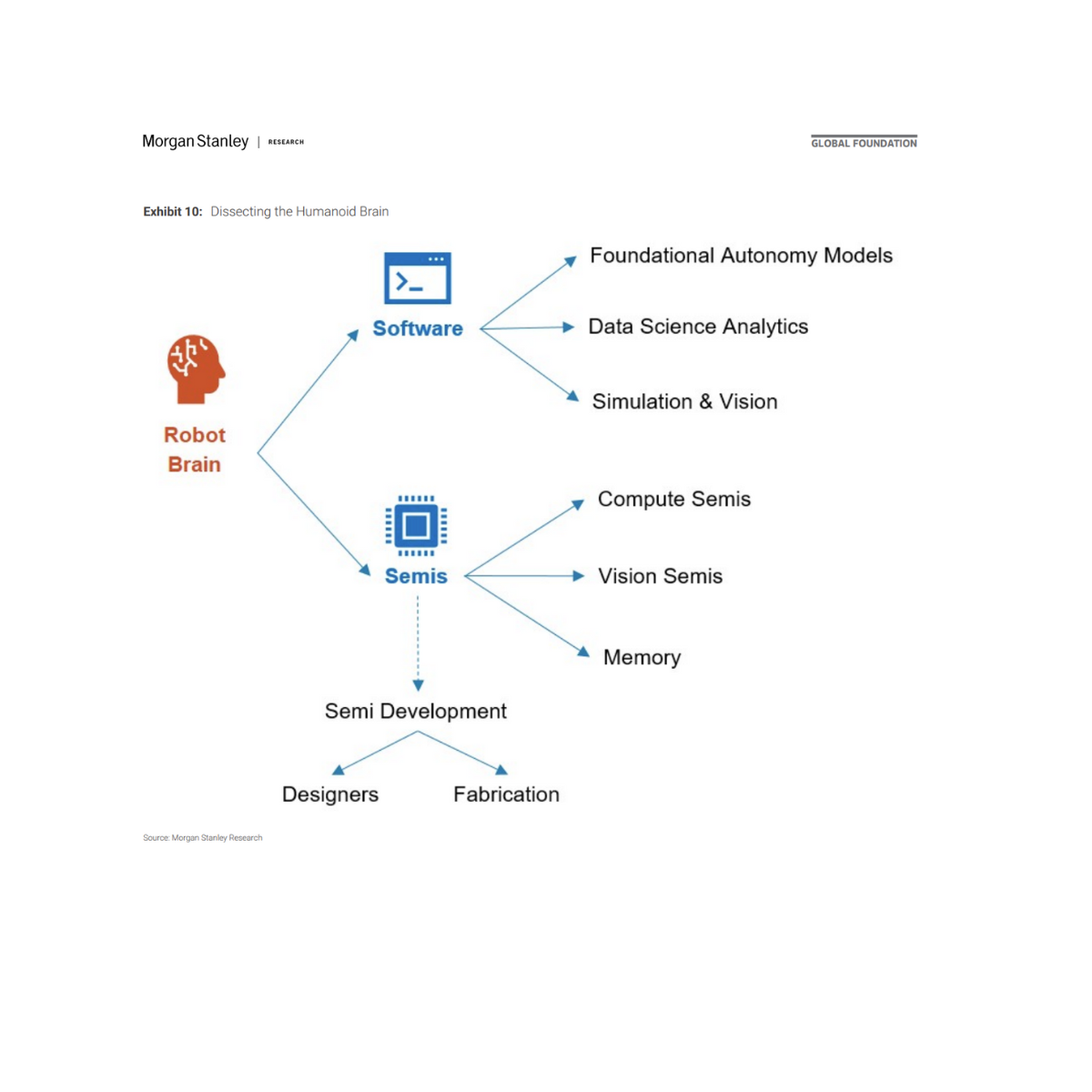

As the hardware layer continues to evolve – bringing robots closer to human-level perception and motion – it lays the foundation for more capable and intelligent machines. But it’s the software stack layered on top of this hardware that truly unlocks autonomy. From data collection and training to machine vision, software is what enables robots to operate in dynamic, real-world environments. The following sections explore the key software layers driving this transformation.

D. Data & Training: The foundation of learning

High-quality, diverse data is essential for teaching robots how to act in the real world, and producing it involves several critical steps. Startups like Anyverse generate high-quality synthetic data tailored to robotic perception, while Sensei Robotics provides outsourced training-data collection. Complementing these, Encord offers a multimodal AI data platform to label, curate, and manage datasets; ReSim enables scalable, cloud-based virtual testing of robotics and autonomy systems; and Rerun delivers an open-source visualization and data stack for logging and analyzing multimodal robotics and vision data in real time. Together, these companies are building the infrastructure needed to accelerate reliable robotics development. Several data collection approaches are being explored today:

- Teleoperation, where humans control robots via joysticks or haptic devices, offers high-quality demonstrations but is hard to scale.

- AR-based data allows operators to guide robots in augmented reality environments, but adoption remains limited by headset usage.

- Simulation environments enable rapid iteration and large-scale experimentation, though transferring models to the real world remains a challenge.

- Video learning is a promising but early-stage approach that trains robots on third-person videos, such as those found on YouTube, though it suffers from a lack of physical interaction data.

Training sits at the heart of robotics progress. To handle real-world complexity, companies rely on advanced simulation and model training techniques. Hyperscalers like NVIDIA (Cosmos) and Meta (PARTNR), and open-source tools like Gazebo and Hugging Face (LeRobot) are shaping foundational infrastructure. Google’s Gemini for the Physical brings embodied reasoning into play, while startups like Genesis are building full-stack training platforms. European players such as Mistral (Les Ministraux), Flexion, Vsim, and Phospho are also pushing the frontier – offering edge-optimized models and training infrastructure to help robots reason, learn, and act autonomously in complex environments. Training spans multiple methods:

- Reinforcement learning is used to teach robots through trial and error, with success in tasks like dexterous manipulation or dynamic control.

- Imitation learning allows robots to learn from human demonstrations.

- Curriculum learning allows robots to learn tasks in a structured sequence, starting from simple tasks and gradually progressing to more complex ones – inspired by human education.

- A digital twin is a virtual replica of a physical robot and its operating environment, used to simulate behavior, test strategies, and refine performance.
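To make the trial-and-error idea behind reinforcement learning concrete, here is a minimal sketch of tabular Q-learning on a toy one-dimensional corridor. Everything in it (the corridor, the reward of 1.0 at the goal, the hyperparameters) is an illustrative assumption; real robot training relies on far richer simulators and neural-network policies.

```python
import random

N_STATES = 6          # positions 0..5 on a line; position 5 is the goal
ACTIONS = (-1, +1)    # index 0 = step left, index 1 = step right
ALPHA, GAMMA, EPSILON = 0.5, 0.9, 0.2  # learning rate, discount, exploration


def step(state, action):
    """Move along the corridor; reward 1.0 only on reaching the goal."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done


def train(episodes=300, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # q[state][action_index]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Explore occasionally (and on ties); otherwise exploit.
            if rng.random() < EPSILON or q[state][0] == q[state][1]:
                a = rng.randrange(2)
            else:
                a = 0 if q[state][0] > q[state][1] else 1
            next_state, reward, done = step(state, ACTIONS[a])
            # Q-learning update: move the estimate toward the observed return.
            target = reward + (0.0 if done else GAMMA * max(q[next_state]))
            q[state][a] += ALPHA * (target - q[state][a])
            state = next_state
    return q


q_table = train()
policy = ["left" if left > right else "right" for left, right in q_table[:-1]]
print(policy)
```

The agent starts with no knowledge, wanders randomly until it first reaches the goal, and then gradually propagates that reward backwards until stepping right is preferred in every non-goal position.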

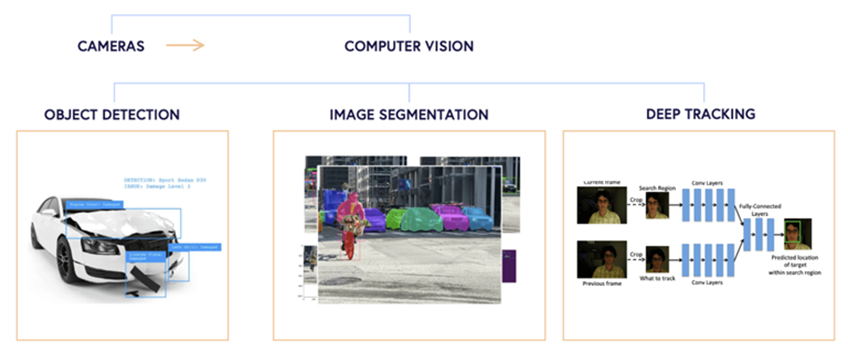

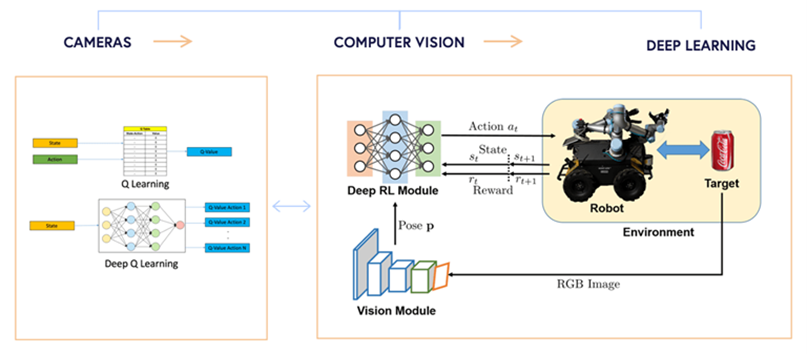

E. Machine vision: Bridging perception and action

Powered by computer vision, sensors (cameras) can now detect and segment objects, track movement, and understand scenes and spatial context. This enables robots to perform essential tasks like navigation and interaction with humans and objects. As vision models improve, robots are increasingly capable of interpreting complex environments with near-human-level perception.

But the hardest challenge for robots is autonomy, which demands not just precise control but contextual understanding. Deep learning and GenAI provide the neural backbone that enables robots to interpret sensory inputs and respond intelligently. One of the most transformative developments is the rise of vision-language-action (VLA) models, which link perception (vision), intent (language), and decision-making (action). These models allow robots to follow natural language instructions, perceive visual scenes, and make context-aware decisions – removing traditional programming barriers and enabling intuitive human-robot interaction.

A growing wave of startups is translating these advances into real-world applications. Inbolt enables robots to make real-time visual decisions in dynamic environments, while Sensmore retrofits heavy machinery with embodied AI and 4D radar for collision avoidance and autonomous operation. Flink, spun out of ETH Zurich, provides plug-and-play software that lets robots see, reason, and act within minutes, and Sereact develops AI systems like PickGPT to power zero-shot manipulation and visual tasks in logistics and warehousing.

F. Foundational Models: Toward general-purpose robotics

The move from narrow, task-specific robots to general-purpose embodied intelligence is being driven by foundation models trained on large-scale, multimodal datasets. These models offer: (i) cross-platform generalization – one model can operate across different hardware platforms, (ii) semantic understanding of the physical world – going beyond geometry to reason about intent and function, and (iii) transferability of learning – knowledge gained in one task or domain can be reused elsewhere. Companies like Skild AI and Physical Intelligence are developing large-scale models that can learn from demonstrations, interpret commands, and make autonomous decisions in real time. Arondite provides software to orchestrate autonomous systems, while General Robotics is building general-purpose robotic intelligence with plug-and-play skills. However, challenges remain in aligning these models with physical embodiment.

G. Fleet Management

As robotics deployments scale, especially in logistics and delivery, fleet management systems have become essential for coordinating, monitoring, and optimizing multiple robots in real time. These platforms handle task allocation, traffic control, energy optimization, and predictive maintenance, ensuring that robots operate efficiently as a team. Companies like Formant and InOrbit offer solutions that provide centralized dashboards, analytics, and remote-control capabilities. Effective fleet management is crucial for scaling operations while avoiding collisions, idle time, and inefficiencies.
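As an illustration of the task-allocation piece, the sketch below greedily assigns each task to the nearest available robot that has enough battery. The robot and task names, the 20% battery threshold, and the greedy rule itself are assumptions made for the example; commercial fleet managers use more sophisticated optimization and we are not describing any vendor's actual method.

```python
from dataclasses import dataclass
from math import hypot


@dataclass
class Robot:
    name: str
    x: float
    y: float
    battery: float  # state of charge, 0.0 to 1.0


@dataclass
class Task:
    name: str
    x: float
    y: float


def allocate(robots, tasks, min_battery=0.2):
    """Greedily give each task to the closest available robot with enough charge."""
    available = [r for r in robots if r.battery >= min_battery]
    assignments = {}
    for task in tasks:
        if not available:
            break  # remaining tasks stay unassigned until a robot frees up
        nearest = min(available, key=lambda r: hypot(r.x - task.x, r.y - task.y))
        assignments[task.name] = nearest.name
        available.remove(nearest)
    return assignments


fleet = [
    Robot("amr-1", 0, 0, 0.9),
    Robot("amr-2", 10, 0, 0.15),  # too low on charge to be dispatched
    Robot("amr-3", 4, 3, 0.6),
]
jobs = [Task("pick-A", 9, 1), Task("pick-B", 1, 1)]
plan = allocate(fleet, jobs)
print(plan)
```

Here the robot closest to the first task is skipped because of its low battery, which shows how energy constraints and task allocation interact even in a toy setting.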

H. Robotics OS

Robotics operating systems serve as the foundational software layer that enables robots to perceive, plan, and act by managing how different components – sensors, actuators, algorithms – interact. Much like operating systems in personal computers, these platforms provide middleware to simplify development and allow for modular design. The most widely used framework is ROS (Robot Operating System), along with its more robust successor ROS 2, which supports real-time communication, multi-robot systems, and industrial-grade deployments. However, several alternatives are emerging, such as Apex.AI and Realtime Robotics.
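The middleware idea can be sketched with a toy publish/subscribe bus, the messaging pattern that frameworks such as ROS are built around. This is plain Python written for illustration and is not the ROS API; the `MessageBus` class, the topic names, and the 0.5 m stopping threshold are all hypothetical.

```python
from collections import defaultdict


class MessageBus:
    """Toy in-process publish/subscribe bus (illustrative, not the ROS API)."""

    def __init__(self):
        self._subscribers = defaultdict(list)

    def subscribe(self, topic, callback):
        self._subscribers[topic].append(callback)

    def publish(self, topic, message):
        # Deliver the message to every component listening on this topic.
        for callback in self._subscribers[topic]:
            callback(message)


bus = MessageBus()
motor_log = []  # stands in for the actuator receiving velocity commands


def plan_motion(distance_m):
    # Planner: stop if an obstacle is closer than half a metre, else cruise.
    bus.publish("cmd_velocity", 0.0 if distance_m < 0.5 else 0.3)


bus.subscribe("range_sensor", plan_motion)
bus.subscribe("cmd_velocity", motor_log.append)

# The sensor only publishes readings; it never calls the planner directly.
for reading in (2.0, 1.1, 0.4):
    bus.publish("range_sensor", reading)

print(motor_log)
```

Because the sensor, planner, and motor only share topic names, any one of them can be swapped out without touching the others, which is the modularity the paragraph above refers to.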

I. Security & Safety

As robots operate closer to humans and handle sensitive tasks, safety and security are critical enablers of trust and adoption. On the safety side, systems must comply with strict standards to avoid harm through redundancy, fail-safe systems, and real-time monitoring. For security, protecting robots from cyberattacks or data leaks is increasingly important, especially in healthcare and defense. Startups like Fort Robotics and Alias Robotics are building safety and cybersecurity stacks for robotic systems, while Exein embeds AI-powered security at the firmware level, mitigating attacks before they escalate. Regulators are also beginning to define frameworks for certification and compliance across sectors.

Market Map

If you can’t wait for part three, download the full whitepaper below.