Camille Périssère

Senior Associate

Introduction

We recently explored the hidden costs of innovation when we looked at the environmental footprint of GenAI in our previous blog post, but the future doesn’t have to be power-hungry. Through smart infrastructure, new materials, and purposeful deployment, we can shape an AI ecosystem that works with the planet, not against it. In this chapter, we explore solutions and technologies that support a greener computing future. This list will keep evolving, as new techniques to improve AI energy efficiency and reduce the carbon footprint of computing emerge almost daily.

Powering Data Centers with Renewable Energy and Carbon Removal Solutions

Shifting AI processing to data centers powered by renewable and low-carbon energy is arguably the most critical step for a project like Stargate. To meet its substantial energy requirements, several dedicated energy solutions are planned [1], including:

- Solar energy and battery storage: SB Energy (SoftBank) will develop solar generation paired with battery storage, providing its own off-grid power through behind-the-meter solutions.

- Small Modular Nuclear Reactors (SMRs): Stargate plans to deploy SMRs to provide a stable baseload power supply and minimize transmission losses [2].

- Carbon Capture: The energy infrastructure will continue to rely on natural gas as a primary source. However, Carbon Capture, Utilization, and Storage (CCUS) systems can capture carbon dioxide directly at the source, supporting global efforts to reduce greenhouse gas emissions.

In France, President Emmanuel Macron has announced a €109 billion investment in AI infrastructure to “Make France an AI powerhouse”. That is around five times less than Stargate, roughly in proportion to the two countries’ populations. Through this investment plan, France aims to host key global infrastructure and, thanks to its low-carbon energy mix [3], help reduce the sector’s overall environmental footprint. France benefits from several key assets that make its territory attractive for dedicated AI infrastructure:

- a plentiful supply of decarbonized energy – 95% of the electricity produced is already low-carbon, primarily from nuclear power – combined with competitive and stable electricity prices;

- a strategic geographical position, with two-thirds of the EU’s subsea cables landing in France;

- land well-suited for data center projects, including 35 pre-identified sites across mainland France.

Innovating towards More Sustainable Semiconductor Materials

While silicon has been the cornerstone of semiconductor technology for decades, it is now approaching its physical limits. It falls short in delivering the power efficiency, thermal management, switching speed, and voltage tolerance that next-generation data centers demand.

That’s why the industry is exploring alternative materials such as Gallium Nitride (GaN) and Silicon Carbide (SiC), which outperform silicon on these criteria. GaN devices can deliver 3x the power or charging speed of silicon [4], achieve up to 40% energy savings, and occupy roughly half the size and weight of their silicon counterparts. SiC, with its superior thermal conductivity and optimized performance at lower switching frequencies, is better suited to the high-power, high-voltage applications found at the data-center perimeter. As a result, a hybrid architecture, in which SiC handles incoming medium-voltage conversion and GaN manages rack- and board-level DC–DC stages, is rapidly becoming the industry standard.

Beyond performance, this shift offers significant environmental benefits. GaN manufacturing emits 10x less CO₂ than silicon production. Replacing legacy silicon with high-efficiency GaN in data-center power systems could also cut electricity consumption by up to 10%, yielding a substantial reduction in global carbon emissions.
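Stage-level gains add up because losses compound along the power conversion chain. End-to-end efficiency is the product of the individual stage efficiencies. The sketch below assumes a hypothetical three-stage chain. The efficiency figures and the 1 MW load are illustrative assumptions, not values from the sources cited here.

```python
from math import prod


def chain_efficiency(stage_efficiencies: list[float]) -> float:
    """End-to-end efficiency of power conversion stages connected in series."""
    if not all(0.0 < e <= 1.0 for e in stage_efficiencies):
        raise ValueError("each stage efficiency must be in (0, 1]")
    return prod(stage_efficiencies)


def grid_power_needed(it_load_kw: float, stage_efficiencies: list[float]) -> float:
    """Grid power (kW) required to deliver it_load_kw to the servers."""
    return it_load_kw / chain_efficiency(stage_efficiencies)


# Hypothetical stage efficiencies (medium-voltage AC-DC, intermediate DC-DC,
# board-level DC-DC), for illustration only.
legacy = [0.96, 0.94, 0.90]  # assumed silicon-based chain
hybrid = [0.98, 0.97, 0.95]  # assumed SiC front end + GaN DC-DC stages

it_load_kw = 1_000.0  # assumed 1 MW of IT load
p_legacy = grid_power_needed(it_load_kw, legacy)
p_hybrid = grid_power_needed(it_load_kw, hybrid)
saving = 1 - p_hybrid / p_legacy
print(f"legacy: {p_legacy:.0f} kW, hybrid: {p_hybrid:.0f} kW, saving: {saving:.1%}")
```

Because the stages multiply, a few points of efficiency gained at each stage translate into a noticeably lower grid draw for the same IT load.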

Reducing Data Footprint

Effective data management is all about strategically utilizing storage space. By reducing your data footprint, you can minimize the number of storage systems required – reducing both cost and environmental impact.

There are several ways to reduce your data footprint [5]:

- Data deduplication removes redundant copies of files, freeing up storage space.

- Data compression reduces the size of stored data by identifying patterns and replacing them with shorter codes.

- Data tiering relocates infrequently accessed data from high-performance, expensive storage to more cost-effective alternatives.

- Archiving moves rarely used data offline, keeping it accessible when needed without occupying primary storage.
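The tiering and archiving options above boil down to a placement policy. Below is a minimal sketch of an age-based policy. The three tier names, the 30- and 180-day thresholds, and the `StoredObject` type are hypothetical choices for illustration. Production systems typically also weigh access frequency, object size, and retrieval costs.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Hypothetical thresholds; real policies depend on workload and storage pricing.
HOT_MAX_AGE = timedelta(days=30)
WARM_MAX_AGE = timedelta(days=180)


@dataclass
class StoredObject:
    name: str
    size_bytes: int
    last_accessed: datetime


def choose_tier(obj: StoredObject, now: datetime) -> str:
    """Assign an object to a storage tier based on time since last access."""
    age = now - obj.last_accessed
    if age <= HOT_MAX_AGE:
        return "hot"      # high-performance primary storage
    if age <= WARM_MAX_AGE:
        return "warm"     # cheaper, slower storage
    return "archive"      # offline / cold archive


def plan_moves(objects: list[StoredObject], now: datetime) -> dict[str, list[str]]:
    """Group object names by their target tier."""
    plan: dict[str, list[str]] = {"hot": [], "warm": [], "archive": []}
    for obj in objects:
        plan[choose_tier(obj, now)].append(obj.name)
    return plan


now = datetime(2025, 1, 1)
objects = [
    StoredObject("dashboard.parquet", 2_000_000, now - timedelta(days=2)),
    StoredObject("q3_logs.gz", 50_000_000, now - timedelta(days=90)),
    StoredObject("2019_backup.tar", 900_000_000, now - timedelta(days=1200)),
]
print(plan_moves(objects, now))
```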

Deduplication and compression significantly shrink your storage footprint while maintaining data integrity. By compressing data, you can store more within the same infrastructure, delaying the need for additional storage expansion. Additionally, compressing data before transmission optimizes network bandwidth, leading to faster transfers, reduced latency, and improved overall efficiency. Ultimately, these optimizations lower storage costs, enhance performance, and contribute to a more sustainable and cost-effective data center.
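Here is a minimal sketch of deduplication and compression using only Python's standard library. Deduplication is content-addressed via SHA-256 digests (`hashlib`), followed by DEFLATE compression (`zlib`). The sample data is made up. Real storage systems usually deduplicate at the block or chunk level rather than per whole file.

```python
import hashlib
import zlib


def deduplicate(blobs: list[bytes]) -> tuple[dict[str, bytes], list[str]]:
    """Store each unique blob once, keyed by its SHA-256 digest."""
    store: dict[str, bytes] = {}
    refs: list[str] = []
    for blob in blobs:
        digest = hashlib.sha256(blob).hexdigest()
        store.setdefault(digest, blob)  # keep only the first copy
        refs.append(digest)             # every original keeps a pointer
    return store, refs


def compress_store(store: dict[str, bytes], level: int = 6) -> dict[str, bytes]:
    """Compress each unique blob with zlib (DEFLATE)."""
    return {k: zlib.compress(v, level) for k, v in store.items()}


# Illustrative data: three files, two of which are identical.
report = b"region,kwh\n" + b"eu-west,1200\n" * 500
files = [report, report, b"unique config file\n"]

store, refs = deduplicate(files)
compressed = compress_store(store)

raw = sum(len(f) for f in files)
after_dedup = sum(len(v) for v in store.values())
after_compression = sum(len(v) for v in compressed.values())
print(raw, after_dedup, after_compression)

# Integrity check: every original file can be restored byte-for-byte.
restored = [zlib.decompress(compressed[d]) for d in refs]
assert restored == files
```

The final check shows that both steps are lossless. Every original file is rebuilt byte-for-byte from its digest and the compressed store.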

Data infrastructure technologies remain one of our main investment focuses at AVP. Notable companies in this space include established players such as Cribl and Collibra, as well as earlier-stage companies like Onum. Onum is a Spanish startup founded in 2022 that reduces data analytics spend by an average of 50% by eliminating incomplete and duplicate data.

Optimizing Hardware Infrastructure

Virtualization / Containerization

Another way to reduce carbon emissions is to shrink the hardware footprint required to run your systems by deploying virtualization and containerization technologies. This reduces the need for additional hardware and minimizes energy consumption.

- Virtualization lets multiple virtual machines (VMs) share a single physical server, dynamically allocating CPU, memory, and storage to each VM. This consolidation boosts efficiency, simplifies management, and scales more smoothly.

- Containerization takes efficiency further by packaging applications in lightweight, self‑contained environments. Because containers use far fewer resources than full VMs, you can run more of them on the same hardware, speeding up deployment, scaling, and infrastructure optimization.
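Consolidation is essentially a bin-packing problem: fit many lightly used workloads onto as few hosts as possible. The sketch below uses first-fit decreasing, a classic heuristic chosen here for illustration. It is not how Kubernetes or any particular hypervisor schedules workloads, and the demand figures are hypothetical.

```python
def first_fit_decreasing(demands: list[float], capacity: float) -> list[list[float]]:
    """Pack workloads onto as few servers as possible (first-fit decreasing).

    demands: resource demand per workload (e.g. vCPUs).
    capacity: per-server capacity in the same unit.
    Returns one list of placed demands per server.
    """
    servers: list[list[float]] = []
    free: list[float] = []
    for d in sorted(demands, reverse=True):  # largest workloads first
        if d > capacity:
            raise ValueError(f"workload of size {d} exceeds server capacity {capacity}")
        for i, room in enumerate(free):
            if d <= room:  # first server with enough room
                servers[i].append(d)
                free[i] -= d
                break
        else:  # no existing server fits: start a new one
            servers.append([d])
            free.append(capacity - d)
    return servers


# Hypothetical fleet: 12 workloads, each historically on its own physical server.
demands = [2, 4, 1, 3, 2, 6, 1, 2, 4, 3, 1, 2]  # vCPUs actually used (assumed)
capacity = 16                                   # vCPUs per host (assumed)

packed = first_fit_decreasing(demands, capacity)
print(f"{len(demands)} dedicated servers -> {len(packed)} shared hosts")
```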

To effectively manage containerized applications, orchestration platforms – most notably the open‑source standard Kubernetes – automate deployment, scaling, and monitoring to ensure seamless operation and optimal resource utilization. Because Kubernetes itself can be complex, it typically requires complementary tools to secure and manage cluster deployments. At AVP, we dedicate significant effort to evaluating SaaS solutions – such as the US‑based Rafay – that simplify and streamline sophisticated Kubernetes environments.
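Kubernetes' Horizontal Pod Autoscaler illustrates the automated scaling mentioned above. Its documented core rule is `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`. The rule is skipped when the metric ratio is within a tolerance (0.1 by default). The function below is a simplified sketch of that rule. The real controller also applies stabilization windows, readiness handling, and other safeguards, and the `min_replicas`/`max_replicas` defaults here are arbitrary.

```python
import math


def desired_replicas(current_replicas: int,
                     current_metric: float,
                     target_metric: float,
                     tolerance: float = 0.1,
                     min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """Replica count suggested by the HPA scaling rule, clamped to [min, max]."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: no change
    desired = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, desired))


# 6 pods averaging 20% CPU against a 60% target: scale in, freeing capacity.
print(desired_replicas(6, current_metric=20, target_metric=60))
# 4 pods averaging 90% CPU against a 60% target: scale out.
print(desired_replicas(4, current_metric=90, target_metric=60))
```

Scaling in when utilization is low is what lets idle capacity be released, which is where the energy savings come from.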

By adopting containerization, businesses can significantly reduce their carbon footprint while improving performance and scalability. This makes containerization a key technology in the push for sustainable computing and eco-friendly IT infrastructure.

Software-Defined Storage (SDS)

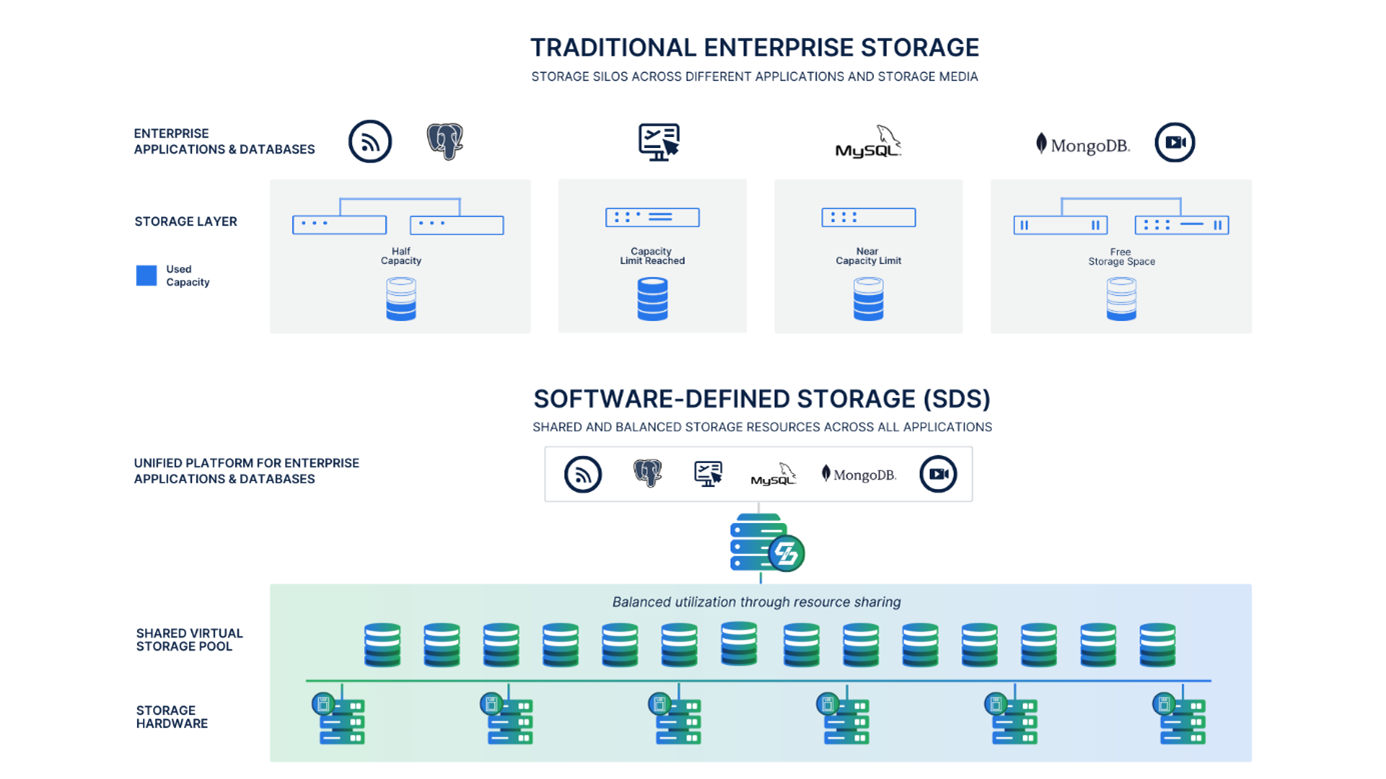

SDS is a data storage management approach that separates the software handling tasks such as storing, protecting, and organizing data from the underlying physical hardware. SDS solutions use a software layer to abstract away the differences between hardware types, so that heterogeneous devices work together smoothly. Unlike hypervisors, which split one server into many virtual machines, SDS pools different storage devices into a single system that can be managed centrally.

Just as Kubernetes does for compute, SDS dynamically provisions, scales, and manages storage. It can help reduce energy consumption and a system’s carbon footprint, especially at scale, in several ways. First, it improves resource efficiency by pooling and optimizing storage, which reduces the need for excess hardware. SDS also extends the life of existing hardware by allowing older and newer devices to work together, helping to cut down on electronic waste. It places data intelligently based on how often it is accessed, ensuring that energy-intensive storage is used only when necessary. SDS supports hybrid and cloud-based storage models, enabling organizations to shift data to more energy-efficient environments. It also enables hyperconverged deployments, where compute and storage share the same infrastructure for maximum resource efficiency, something traditional storage systems cannot support. Finally, its automation capabilities allow systems to scale only when needed and power down idle resources, saving even more energy.

Source: Simplyblock
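The pooling idea can be sketched as a thin software layer over heterogeneous devices. In the hypothetical example below, `Device` and `StoragePool` are illustrative names, not Simplyblock's or any other product's API. A volume is split into fixed-size chunks, and each chunk is placed on the device with the most free space. Real SDS platforms add replication or erasure coding, failure handling, and performance-aware placement.

```python
from dataclasses import dataclass, field


@dataclass
class Device:
    name: str
    capacity_gb: int
    used_gb: int = 0

    @property
    def free_gb(self) -> int:
        return self.capacity_gb - self.used_gb


@dataclass
class StoragePool:
    """Minimal software layer presenting heterogeneous devices as one pool."""
    devices: list[Device] = field(default_factory=list)
    volumes: dict[str, list[tuple[str, int]]] = field(default_factory=dict)

    @property
    def free_gb(self) -> int:
        return sum(d.free_gb for d in self.devices)

    def create_volume(self, name: str, size_gb: int, chunk_gb: int = 10) -> None:
        """Spread a volume across devices in chunks, always using the emptiest device."""
        if chunk_gb <= 0:
            raise ValueError("chunk_gb must be positive")
        if size_gb > self.free_gb:
            raise ValueError("not enough free capacity in the pool")
        placement: list[tuple[str, int]] = []
        remaining = size_gb
        while remaining > 0:
            target = max(self.devices, key=lambda d: d.free_gb)
            chunk = min(chunk_gb, remaining, target.free_gb)
            target.used_gb += chunk
            placement.append((target.name, chunk))
            remaining -= chunk
        self.volumes[name] = placement


# An older HDD array, a new NVMe drive and a mid-range SSD behind one interface.
pool = StoragePool([Device("old-hdd-array", 400), Device("new-nvme", 200), Device("mid-ssd", 100)])
pool.create_volume("analytics", 250)
print(pool.free_gb, pool.volumes["analytics"][:3])
```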

A promising company in this space is Simplyblock, a Berlin-based startup developing next-generation software-defined storage. Its platform handles high-throughput workloads by dynamically balancing performance, latency, and cost.

Using GenAI Selectively and Purposefully

Businesses should thoughtfully assess which AI technique is most appropriate for a given task, rather than defaulting to generative models. While Generative AI is powerful – particularly for tasks like code generation, content creation, and research automation – it is also among the most energy-intensive forms of AI. In many cases, a simpler predictive model can deliver sufficient results with a far lower environmental footprint.
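One simple way to apply this principle is a cascade. Each request goes to a cheap predictive model first and escalates to a generative model only when the cheap model is not confident. The sketch below assumes stand-in models and illustrative relative costs (1 vs 100 units). Neither the cost ratio nor the 0.8 threshold comes from the source.

```python
from typing import Callable

# Hypothetical relative energy costs per request (illustrative units, not measurements).
COST_SMALL_MODEL = 1
COST_GENAI = 100


def route(request: str,
          small_model: Callable[[str], tuple[str, float]],
          genai_model: Callable[[str], str],
          confidence_threshold: float = 0.8) -> tuple[str, int]:
    """Try a cheap predictive model first; escalate to GenAI only when it is unsure.

    Returns (answer, relative_cost).
    """
    label, confidence = small_model(request)
    if confidence >= confidence_threshold:
        return label, COST_SMALL_MODEL
    return genai_model(request), COST_SMALL_MODEL + COST_GENAI


# Stand-in models for illustration only.
def toy_classifier(text: str) -> tuple[str, float]:
    """Keyword-based intent classifier that reports its own confidence."""
    lowered = text.lower()
    if "refund" in lowered:
        return "complaint", 0.95
    if "thanks" in lowered:
        return "praise", 0.9
    return "unknown", 0.3


def toy_genai(text: str) -> str:
    return f"[generated response to: {text!r}]"


requests = ["I want a refund", "Thanks, great service!", "Draft a reply about our Q3 roadmap"]
results = [route(r, toy_classifier, toy_genai) for r in requests]
total_cost = sum(cost for _, cost in results)
print(total_cost)
```

With these toy inputs, only the open-ended drafting request reaches the generative model.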

A promising approach to making GenAI more sustainable is through Edge GenAI, which deploys models directly on local devices such as smartphones, sensors, or industrial gateways. This reduces reliance on large, energy-hungry cloud data centers and minimizes the energy costs associated with transmitting data over networks. Because edge-deployed models are typically smaller and optimized, they enable faster, low-power processing and real-time inference. This is especially beneficial in sectors like smart agriculture, energy grids, and manufacturing, where local decision-making helps reduce waste and improve efficiency. Additionally, by extending the life of existing hardware and avoiding constant upgrades, Edge GenAI can help lower electronic waste and hardware-related emissions.
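Edge models are usually made smaller through techniques such as quantization. The sketch below applies symmetric per-tensor int8 quantization; this technique is chosen here for illustration and is not named in the source. Each weight is stored as an 8-bit integer plus a single float scale. That cuts weight storage to roughly a quarter of float32, at the cost of a small rounding error. The weights are made up.

```python
from array import array


def quantize_int8(weights: list[float]) -> tuple[array, float]:
    """Symmetric per-tensor int8 quantization: w ~= scale * q, with q in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = array("b", (max(-127, min(127, round(w / scale))) for w in weights))
    return q, scale


def dequantize(q: array, scale: float) -> list[float]:
    """Recover approximate float weights from int8 values and the shared scale."""
    return [scale * v for v in q]


weights = [0.42, -1.27, 0.003, 0.88, -0.5, 1.1]  # illustrative layer weights
fp32 = array("f", weights)
q, scale = quantize_int8(weights)

print(f"fp32: {fp32.itemsize * len(fp32)} bytes, int8: {q.itemsize * len(q)} bytes")
max_err = max(abs(a - b) for a, b in zip(weights, dequantize(q, scale)))
print(f"max reconstruction error: {max_err:.4f}")
```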

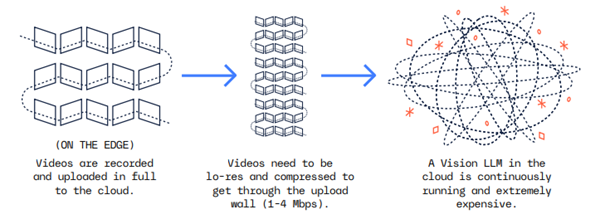

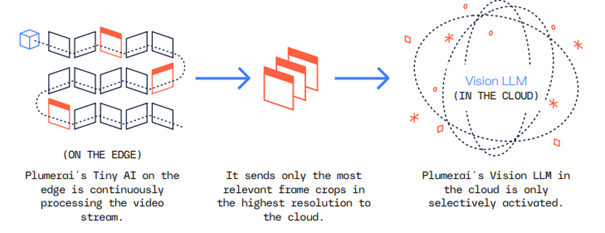

An example of innovation in this space is Plumerai, a company developing ultra-compact AI models for edge-based video intelligence. Their technology powers smart cameras in applications such as home security, enterprise monitoring, and retail analytics. By embedding intelligence directly into the device, Plumerai’s models reduce the need to constantly offload video data to the cloud, avoiding the energy costs of querying large vision models. Their system processes the video stream on-device, sending only the most relevant, high-resolution frame crops to the cloud for further analysis. The Plumerai Vision LLM, hosted in the cloud, is activated only when necessary, ensuring intelligent use of compute resources. This hybrid edge-cloud approach not only avoids continuous data transfer but also achieves high accuracy and ultra-fast performance, as the models operate on uncompressed local data.

Plumerai Tiny AI directing cloud-based Vision LLMs: cloud-based video intelligence solutions vs. Plumerai’s solution (Tiny AI + Vision LLM)

Source: Plumerai
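The edge-cloud split described above amounts to a gating step on the device. The sketch below is hypothetical and does not reflect Plumerai's actual API or models. An on-device detector's outputs are filtered so that only confident, relevant crops are uploaded, and the result is compared with streaming every frame. Frame and crop sizes are assumed values.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    label: str
    confidence: float
    crop_bytes: int  # size of the high-resolution crop around the object


def frames_to_upload(detections_per_frame: list[list[Detection]],
                     relevant_labels: set[str],
                     min_confidence: float = 0.6) -> list[tuple[int, Detection]]:
    """Keep only crops that the on-device model flags as relevant and confident."""
    uploads = []
    for frame_idx, detections in enumerate(detections_per_frame):
        for det in detections:
            if det.label in relevant_labels and det.confidence >= min_confidence:
                uploads.append((frame_idx, det))
    return uploads


FRAME_BYTES = 200_000  # assumed size of one streamed frame

stream = [
    [],                                   # empty scene
    [Detection("cat", 0.9, 15_000)],      # not relevant for this camera
    [Detection("person", 0.92, 30_000)],  # relevant: send crop to cloud
    [Detection("person", 0.4, 28_000)],   # too uncertain: stays on device
    [],
]
uploads = frames_to_upload(stream, relevant_labels={"person"})
edge_bytes = sum(det.crop_bytes for _, det in uploads)
cloud_bytes = FRAME_BYTES * len(stream)
print(f"stream everything: {cloud_bytes} B, edge-filtered: {edge_bytes} B")
```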

In short, by using GenAI selectively and shifting intelligence closer to where data is generated, Edge GenAI offers a concrete and efficient path to reducing AI’s environmental impact.

Conclusion

As we conclude this white paper, it is essential to recognize that mitigating AI’s environmental impact will require exploring a diverse array of green computing technologies beyond those discussed here. Among these, quantum computing stands out for its potential to revolutionize energy efficiency in computational processes.

Quantum systems may eventually tackle certain complex optimization and simulation problems with significantly less energy than classical supercomputers. Examples include energy grid management, climate modeling, and supply chain logistics. This potential efficiency comes from quantum algorithms that exploit superposition and interference to reach solutions in far fewer steps for specific classes of problems. That could reduce the carbon footprint of large-scale computations.

In alignment with this vision, AVP has invested in Alice & Bob, a French startup pioneering fault-tolerant quantum computing. Their innovative approach aims to develop the world’s first error-resistant quantum computer by 2030, unlocking energy-efficient architectures that minimize computational overhead. By supporting such advancements, we at AVP are committed to fostering technologies that not only propel AI forward but also ensure its sustainability for the planet.

Beyond environmental considerations, the geopolitical and strategic implications of initiatives like Stargate are significant, particularly regarding AI dominance and its tangible impact on defence and physical security. Other global powers will almost certainly respond, and similar initiatives are likely to emerge in Europe and China.

Bibliography

[1] https://www.datacenterfrontier.com/machine-learning/article/55272808/from-billions-to-trillions-data-centers-new-scale-of-investment

[2] https://www.certrec.com/blog/energy-demands-for-openai-stargate-project/

[3] https://www.elysee.fr/admin/upload/default/0001/17/d9c1462e7337d353f918aac7d654b896b77c5349.pdf

[4] https://navitassemi.com/sustainability-and-gan/

[5] Decarbonizing the Data Center for Dummies

About AVP

AVP is an independent global investment platform dedicated to high-growth technology companies (from deep tech to tech-enabled) across Europe and North America. It manages more than €3bn of assets across four investment strategies: venture, early growth, growth, and fund of funds. Our multi-stage platform combines global research with local execution to drive investment. Since its establishment in 2016, AVP has invested in more than 60 technology companies and, through its Fund of Funds investment strategy, in more than 60 funds. Beyond providing equity capital, our expansion team works closely with founders. It provides the expertise, connections, and resources needed to unlock growth opportunities, and creates lasting value through meaningful collaborations.