Arthur Brasseur

Senior Associate

Isabel Young

Senior Associate

Augustin Collot

Analyst

Part 1

From Sci-Fi Dreams to Real-World Deployment

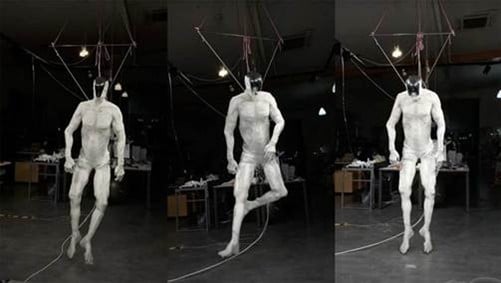

In February 2025, Clone Robotics unveiled something that felt straight out of Westworld: Protoclone, a humanoid robot with 206 bones, 1,000 synthetic muscles, and artificial ligaments — all designed to mimic human biomechanics. Unlike the stiff, mechanical robots of the past, Protoclone uses biomimetic actuators that replicate real muscle movement, enabling fluid, lifelike motion. It’s an engineering milestone and a signal that what was once science fiction is edging into reality.

But here’s the truth: we’re still early. Fully humanlike, general‑purpose robots are years away from mass deployment. Their capabilities remain limited.

The real revolution? It’s already underway — quietly reshaping manufacturing, healthcare, logistics, and construction with robots built for specific, high‑impact tasks.

At AVP, we have spent recent months deepening our understanding of this shift. Through conversations with founders and experts, and a deep dive into the evolving robotics stack, we have developed a perspective on where the sector is headed. We are convinced that verticalized, AI-enabled robotics can transform sectors like manufacturing and healthcare, and that these systems represent strong investment opportunities. With breakthroughs in data infrastructure, computer vision, foundation models, and edge AI, they are evolving from narrow tools into intelligent collaborators. As a result, robotics will not just augment human labour; it will set new standards.

Why Could This Be Robotics' Tipping Point?

Over the past decades, robotics has evolved from rigid industrial machines to intelligent, adaptive systems:

- 1960s–1980s: Industrial robots and automation were born, with Unimate, the first industrial robot, debuting at General Motors.

- 1990s–2000s: Robots began leaving factory floors with Roomba entering homes and Honda’s ASIMO showcasing humanoid mobility.

- 2010s onward: AI, machine learning, and computer vision have pushed robotics into new domains. Boston Dynamics introduced agile, bipedal robots like Atlas, and robots are now widely used in logistics, agriculture, and healthcare.

This historical progression has shaped the diversity of robot types we see today. In robotics, form naturally follows function: designs are optimized for the tasks they perform, much as software interfaces are tailored to specific use cases. As a result, robotic arms dominate in manufacturing, cobots are built to work safely alongside humans, autonomous mobile robots (AMRs) support logistics, quadrupeds handle inspection, and service robots find applications in domestic or retail settings. At the frontier, humanoid robots aim to replicate human form and dexterity to operate in environments built for people, though this path remains early and capital-intensive.

Yet despite this progress, robotics has often fallen short of its grand promise. Why?

- Robotics is fundamentally hard: it sits at the intersection of hardware and software, where progress in one is often constrained by the other. Hardware is costly, complex, and brittle – even small changes can throw off performance.

- Robotics suffers from Moravec's paradox: what's easy for humans (like grabbing a ripe fruit) is extremely difficult for robots, while tasks that are computationally intensive (like playing chess) come far more naturally to them.

- On the software side, robots often lack the generality needed to operate in dynamic, unstructured environments. Most systems today are narrowly programmed for specific tasks, and adapting them to new use cases remains a major challenge.

The result: impressive demos, slow real-world adoption.

Why This Time is Different

Today, a convergence of breakthroughs is pushing robotics past its historical limits.

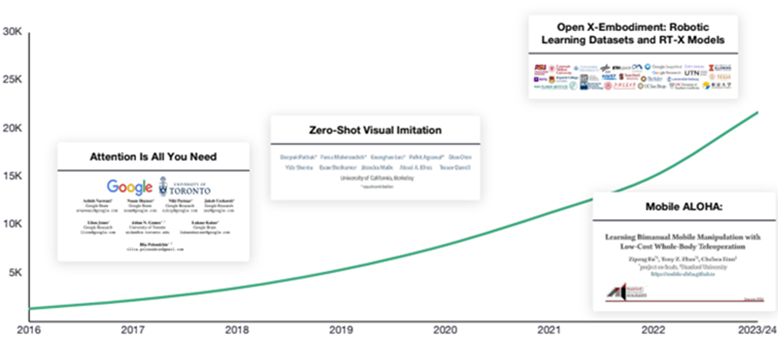

- Research boom: Publications jumped from under 2,000 in 2016 to over 20,000 in 2023. Academic talent is spinning out startups like Physical Intelligence (Berkeley/Stanford) and Cobot (MIT).

- Modular stacks: Open frameworks like ROS 2, PX4, and ArduPilot are making it easier for startups to build on top of shared infrastructure. Instead of reinventing the wheel, teams can now focus on vertical applications.

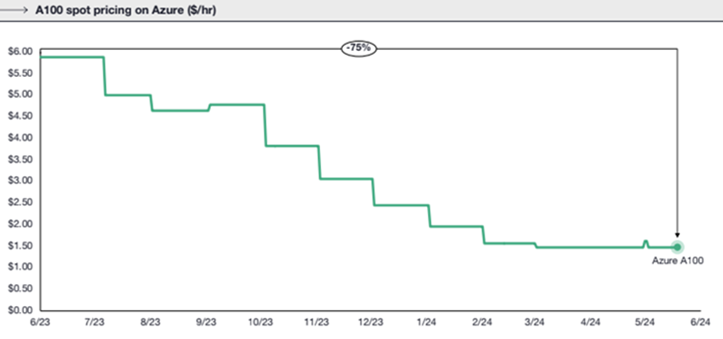

- Falling costs: The cost of training AI models has dropped significantly (GPU prices fell ~75% from 2023 to 2024), making experimentation and iteration faster. Core components such as LiDAR sensors, batteries, and motors are also far cheaper and are approaching commodity status.

Source: Evolution of GPU costs

- Smarter training: With new approaches ranging from teleoperation and simulation to AR-guided learning and video-based training, robots are learning faster.

- Spatial AI models: Just as LLMs enable reasoning over text, spatial AI models are starting to enable reasoning in the physical world, allowing robots to understand objects, relationships, and environments in real time. Demonstrations such as OpenAI’s robot hand solving a Rubik’s Cube suggest that AI can generalize across physical tasks, leading many to believe a “ChatGPT moment” for robotics may be on the horizon.

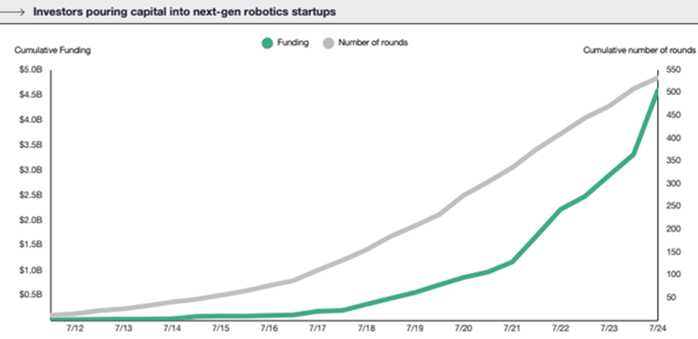

- Investment surge: Funding for robotics startups has spiked, reflecting growing investor conviction.

The Tipping Point

In short, while robotics has long struggled to break out of narrow use cases, a convergence of advances in AI, hardware, and software is beginning to change that reality. The field is gaining momentum, and early signs point to a new generation of robots that are more capable, adaptable, and scalable than ever before.

To better understand where this momentum is heading, the next post in this three-part series will turn to the structure of the robotics market, examining emerging trends across key geographies.

If you can’t wait for part two, download the full whitepaper below.